The Vertalo API Standard

Interoperability and Transparency in Private Asset Management

Abstract

Complex legacy systems have been the data management backbone of Financial Institutions (“FIs”) for decades. The same mainframes and database systems implemented decades ago often remain in use today for data processing, data management, and other back-office operations across the enterprise. However, clients' ever-changing transactional and technological demands require innovation that makes internal and external data sharing more efficient, and the infrastructure of many FIs was not built for these capabilities.

Since the rise of “client/server” architecture, there has been extensive discussion about moving legacy workloads to alternative platforms, including the cloud. Legacy systems have core strengths (low latency, high throughput, security, scalability, etc.) alongside potential weaknesses (expensive maintenance, scarce development resources, siloed data, etc.). Weighed against those strengths, the costs of refactoring and replatforming these systems may be prohibitive.

But as technology evolves and demand for new features accelerates (driven by both internal and external parties), the lack of agility and interoperability from which many legacy systems suffer makes them poor candidates for adopting emerging technologies. Poor interoperability complicates (or prevents) systems integration, forcing duplicated, error-prone manual data entry and dedicated staffing for data maintenance and reconciliation. These problems are especially acute in the asset management and asset servicing (transfer agency, registrar services, etc.) divisions of an FI, and many of the largest FIs still handle asset management data with spreadsheets and email. FIs need to enable interoperability within their current infrastructure without incurring the full costs of uprooting legacy systems. While “rip and replace” may be appropriate in some circumstances, a better approach is to adopt a hybrid of legacy and modern applications, each optimized for what it does best and complementing the other.

Vertalo’s APIs were purpose-built to power the modern FI.

An API-based solution can solve a number of problems for FIs. An API (application programming interface) is a set of protocols that allows different software applications to communicate with each other. Vertalo’s GraphQL APIs are designed and architected as a framework for flexible system integrations, and we have significantly reduced integration complexity by architecting the Vertalo database as a Shared Ledger. Vertalo's APIs also allow FIs to build their own Shared Ledger within their cloud architecture.

This whitepaper discusses FIs’ legacy systems and current data sharing processes in detail, along with the resulting friction and inefficiencies in private asset management and asset servicing. It also discusses how the absence of API standardization has left FIs without scalable solutions. It then presents the architecture, differentiating factors, and use cases of Vertalo's APIs that make them the most scalable and cost-effective solution for FIs. Vertalo's APIs enable a true “Golden Source” for an FI’s data, improving both data sharing processes and data reliability.

Introduction

Within FIs, private asset management stands out as a domain with unique complexities and challenges. Unlike more liquid and standardized publicly traded financial instruments, private assets by their very nature entail a degree of opacity, individualized structuring, and limited, often ambiguous regulatory oversight. As a result, managing private assets often involves significant friction, with issues ranging from the poor interoperability of legacy systems to evolving compliance demands. While FIs strive to reduce the cost of asset management to offset the fee reductions driven by competitive margin compression, they continue to grapple with outdated data management infrastructure that increases costs year over year and prevents efficiency gains.

Problem: Legacy Systems Incompatibility

Legacy systems, broadly defined, are older IT systems, methods, and technologies that remain in use. Many financial institutions, especially the older and larger ones, rely on decades-old systems for their daily operations. While these systems were state-of-the-art when implemented, they have become problematic and limiting as newer technologies emerged. This is particularly relevant for private asset management, where modern tools and methodologies may significantly increase profitability and lower costs, lessening the effects of margin compression.

Infrastructure Maintenance Costs

Older systems often run on outdated hardware which is expensive to maintain, repair, or replace. This leads to increasing costs in terms of energy, space, and upkeep. As the software becomes outdated, it often requires specialized knowledge or even bespoke patches and fixes to remain operational. This increases both direct costs (hiring specialists) and indirect costs (downtime, inefficiencies).

Integration Challenges

Legacy systems often don’t easily integrate with new systems. This means that as institutions adopt newer technologies, they are required to spend additional resources to bridge the old to the new. The lack of interoperability leads to fragmented views of assets and a lack of real-time data synchronization. This can be particularly problematic for private asset management, where timely, integrated insights are crucial for informed decision-making and payment accuracy.

Operational Inefficiencies

Modern systems built on API architectures can provide automated processes that improve operational efficiency. Legacy systems, by contrast, often involve more manual processes, which not only slow down operations but also introduce human error. System downtime and breakdowns, more frequent with legacy systems, can disrupt trading, reporting, and other essential functions.

Security Vulnerabilities

Outdated systems often have unpatched vulnerabilities which make them susceptible to cyber-attacks and costly downtime. This poses not just a financial risk, but also reputational risks. New regulations and compliance standards might not be met by these older systems, leading to potential legal and regulatory implications.

Limited Scalability

Legacy systems can constrain the growth of an institution. When transaction volumes rise or when new asset classes or investment strategies are adopted, these systems struggle to keep up with institutional demands, potentially leading to lost opportunities.

Delayed Time-to-Market

The private investment landscape changes over time, and systems must adapt and expand to address evolving regulations, competition, and financial innovation. When launching new financial products or adapting to market shifts, legacy systems can slow down the institution's response time. In the fast-paced world of finance, this can lead to missed opportunities.

Employee Training and Retention

Younger professionals, trained on modern systems, often find legacy systems frustrating and unintuitive. This can increase training costs and may drive staff turnover as these professionals seek opportunities at more technologically advanced institutions.

Legacy systems, while they have served financial institutions well in the past, increasingly act as cost centers and barriers to efficiency in today's fast-evolving financial landscape. In private asset management in particular, where precision, speed, and adaptability are crucial, reliance on outdated systems can severely hinder performance and growth. Institutions therefore face a critical decision: whether to upgrade and modernize their platforms to stay competitive and efficient.

Solution: API Standardization

In the traditionally siloed and complex environment of private asset management, APIs provide the interoperability essential for real-time decision-making, cost savings, and technological innovation. Moreover, the modular nature of APIs allows for targeted integration, letting institutions choose specific functionalities, adapt to evolving regulatory requirements, and scale with market demands.

By creating a more transparent and efficient data-sharing mechanism, APIs pave the way for streamlined operations, fewer errors, and closer collaboration within the financial sector. In private asset management specifically, they play a pivotal role in enhancing data sharing and cutting costs.

Real-time Data Access

Real-time data sharing via APIs gives asset managers up-to-the-minute information on their portfolios. This reduces manual data retrieval and updates, cutting operational overhead and the chance of human error, and supports quicker, better-informed decision-making that can enhance returns.

Seamless Integration with Other Systems

APIs allow diverse software systems to communicate effortlessly. For FIs, this means that broker-dealers, KYC/AML, custodians, transfer agents, trading platforms, and many other tools and services can be integrated seamlessly, improving workflow efficiency. This reduces the costs and time associated with manual data transfer between non-integrated systems.

Scalability

As the volume of assets under management grows or as institutions diversify into new asset classes, APIs allow data processing to scale without the need for significant system overhauls. They provide an extensible infrastructure that can easily accommodate growth, preventing potential future migration costs.

Enhanced Security

Modern APIs are typically built on standard security protocols, such as TLS encryption in transit and token-based authentication, so that data sharing meets industry standards for encryption and authentication. This reduces the potential costs associated with data breaches or non-compliance with data protection regulations.

Facilitation of Third-party Integrations

Asset managers can leverage specialized third-party tools and platforms via APIs, rather than building in-house solutions, which can be cost-intensive. APIs enable integration with advanced blockchain protocols, AI tools, or other emerging technologies, ensuring that asset managers can stay at the forefront of industry innovation at a reduced cost.

Operational Efficiency and Automation

Automated data transfer and processing, facilitated by APIs, can drastically reduce the operational costs associated with manual processes. Improved efficiency means that asset managers can focus on core tasks like investment strategy rather than getting bogged down by data management intricacies.

Transparency and Reporting

With APIs, asset managers can provide real-time portfolio insights and reporting to clients through client-facing platforms. This enhances transparency and client trust while reducing the need for manual report generation, saving both cost and time.

Cost-effective Innovations

Open APIs and developer-friendly interfaces (such as GraphQL) encourage innovation by allowing third-party developers to create applications or tools tailored to specific needs. Leveraging partner-sourced innovation often yields more rapid and cost-effective system enhancement than in-house development.

APIs represent a transformative force in asset management as well as across an FI, providing pathways to improved efficiency, real-time data sharing, and cost reductions. By leveraging APIs, institutions can not only optimize their operations but also enhance client trust and engagement, all while maintaining security and compliance standards. As the digital transformation wave continues, the integration and utilization of APIs will undoubtedly become even more central to the successful management of private assets.

The Vertalo API Standard

Vertalo's APIs serve as a key technological bridge for enterprise infrastructure, providing a flexible and powerful gateway for developers and FIs.

Architecture

Vertalo's interface is GraphQL-based, which makes it considerably more flexible than traditional REST APIs. GraphQL began in 2012 as an internal Facebook project to address the limitations of standard methods of querying data. As the complexity of Facebook’s data needs grew, there was a pressing need for a more efficient, flexible, and powerful system: traditional REST approaches often resulted in over-fetching of data, leading to inefficiencies and challenges in maintaining a consistent user experience across platforms.

GraphQL and REST are both prevalent API technologies, each with distinct advantages. Compared with REST, however, GraphQL offers several benefits. First, GraphQL lets clients precisely define the structure of the response data they need, which minimizes over-fetching and under-fetching. This specificity can make network requests more efficient and pages load faster. Second, GraphQL operates through a single endpoint, which streamlines client-server interaction and simplifies version management; REST, by contrast, typically exposes a separate endpoint for each resource. Third, GraphQL's strongly typed schema ensures that API calls adhere to a predefined structure, reducing runtime failures and making data interactions more reliable. Finally, GraphQL's introspection lets developers explore the schema, which makes documentation and tooling more interactive and smooths the development experience.
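To make the single-endpoint, client-shaped request concrete, the sketch below builds a GraphQL request body in the conventional JSON form (`{"query": ..., "variables": ...}`) that GraphQL servers commonly accept over HTTP POST. The endpoint URL and every schema field (`investor`, `holdings`, `tokenName`, `quantity`) are hypothetical placeholders, not Vertalo's published schema.

```python
import json
from typing import Tuple

# Hypothetical endpoint: every operation is sent to this one URL.
GRAPHQL_ENDPOINT = "https://api.example.com/graphql"

# The client names exactly the fields it needs; nothing else is returned.
# Field names are illustrative assumptions, not Vertalo's schema.
INVESTOR_HOLDINGS_QUERY = """
query InvestorHoldings($investorId: ID!) {
  investor(id: $investorId) {
    name
    holdings {
      tokenName
      quantity
    }
  }
}
"""

def build_request(query: str, variables: dict) -> Tuple[str, bytes]:
    """Return (url, body) for a GraphQL POST request."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return GRAPHQL_ENDPOINT, body

if __name__ == "__main__":
    url, body = build_request(INVESTOR_HOLDINGS_QUERY, {"investorId": "inv-123"})
    print(url)
    print(json.loads(body)["variables"])
```

In a REST design, the same data might take separate calls such as `/investors/{id}` and `/investors/{id}/holdings`; here one POST carries the whole request, and the response mirrors the shape of the query.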

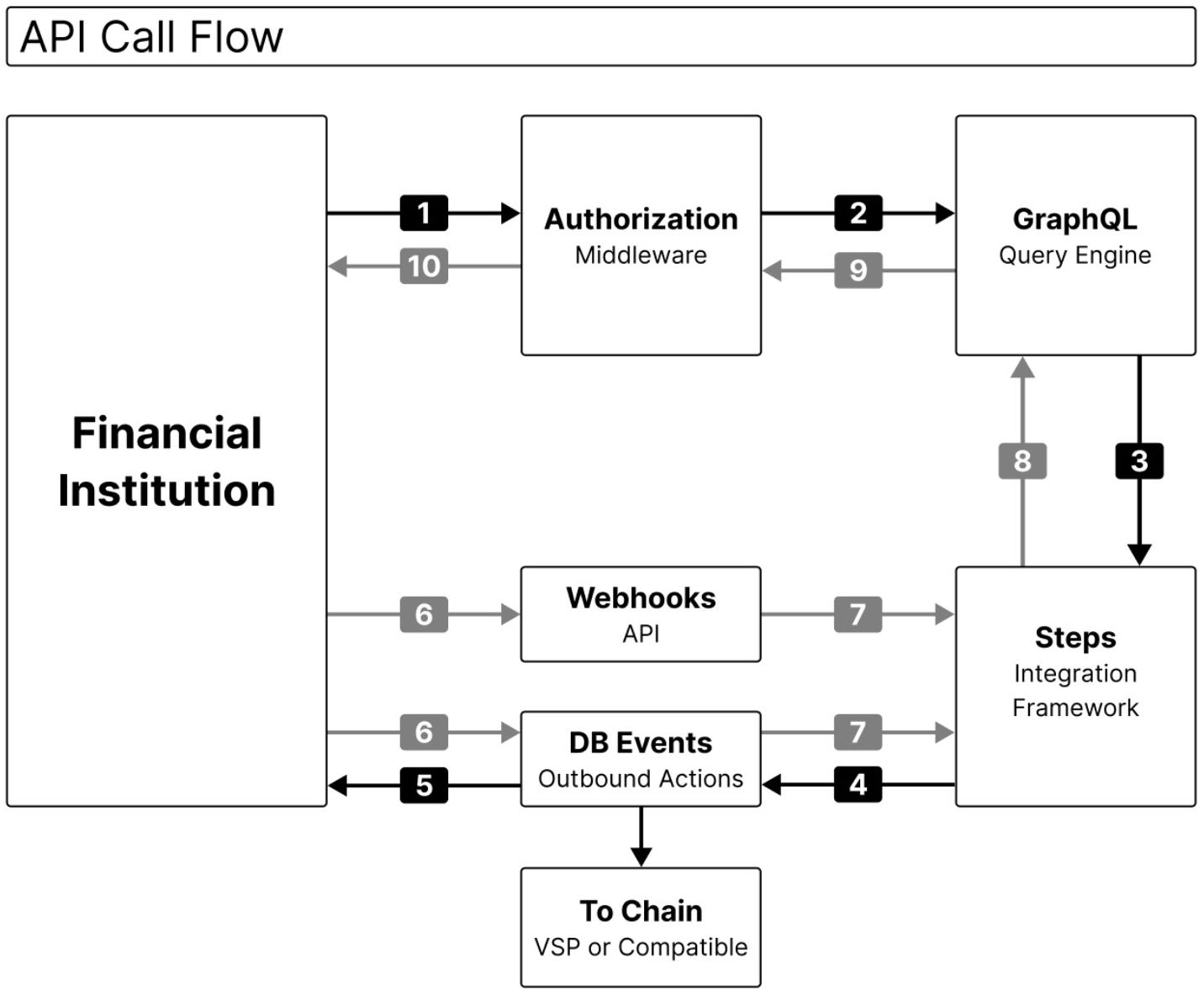

API Call Flow

The architecture of a Vertalo API call is illustrated below:

At the core of Vertalo’s API architecture is a well-structured API call process designed for efficiency, a fluid developer experience, and security. When an API call is initiated, it is first directed to our Auth application, which serves as the gateway to our interface. The Auth application fetches the caller's session data from session storage to confirm that the call is authenticated. This initial step protects the integrity and security of the data being accessed and ensures that only valid requests proceed further down the pipeline.
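The gateway step can be sketched as a function that looks up the caller's session before anything else runs. This is a minimal illustration assuming an in-memory session store keyed by a bearer token; `SESSION_STORE`, `Session`, `authenticate`, and `handle_call` are hypothetical names, and a production system would use a shared session service rather than a Python dictionary.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Session:
    user_id: str
    role: str          # role the user authorized as
    expires_at: float  # Unix timestamp

# Hypothetical session storage standing in for a real session service.
SESSION_STORE: Dict[str, Session] = {}

class AuthError(Exception):
    """Raised when a call must not proceed past the gateway."""

def authenticate(token: str, now: Optional[float] = None) -> Session:
    """Look up the caller's session; only valid calls continue down the pipeline."""
    now = time.time() if now is None else now
    session = SESSION_STORE.get(token)
    if session is None:
        raise AuthError("unknown session")
    if session.expires_at <= now:
        raise AuthError("session expired")
    return session

def handle_call(token: str, query: str) -> str:
    session = authenticate(token)  # gateway: nothing below runs on failure
    # A real pipeline would hand the query to the GraphQL engine here,
    # carrying the authorized role along with it.
    return f"forwarding query as role {session.role!r}"
```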

Upon successful authentication and authorization, the GraphQL query engine acts as an intermediary between the API call and our primary data processing framework, called “Steps.” Steps is an integration framework that bridges the API and the database events system: it interprets the specifics of each API call and prompts the database events system to either generate or retrieve the necessary data.
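The internals of Steps are not described here, so the following is only a hypothetical sketch of the general pattern: a resolved GraphQL operation is translated into a database event that either generates or retrieves data, and the caller receives a request ID to correlate the eventual result. `DbEvent`, `ROUTES`, `EVENT_QUEUE`, and `interpret_call` are illustrative names, and the operation names are assumptions.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

@dataclass
class DbEvent:
    kind: str                 # "generate" or "retrieve"
    entity: str               # kind of record the event targets
    payload: Dict[str, Any]
    role: str                 # role the call was authorized as
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))

# Hypothetical routing table from GraphQL operations to event types.
ROUTES: Dict[str, Tuple[str, str]] = {
    "createInvestor": ("generate", "investor"),
    "investor": ("retrieve", "investor"),
    "holdings": ("retrieve", "holding"),
}

# Stand-in for the queue consumed by the database events system.
EVENT_QUEUE: List[DbEvent] = []

def interpret_call(operation: str, args: Dict[str, Any], role: str) -> str:
    """Translate a resolved GraphQL operation into a database event.

    Returns a request ID the caller can use to correlate the eventual result.
    """
    if operation not in ROUTES:
        raise ValueError(f"unsupported operation: {operation}")
    kind, entity = ROUTES[operation]
    event = DbEvent(kind=kind, entity=entity, payload=dict(args), role=role)
    EVENT_QUEUE.append(event)
    return event.request_id
```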

Once the data is available, the process runs in reverse: the Webhooks API signals completion of the asynchronous request and relays the information back to the third party or the initiating FI. This notification confirms successful completion of the asynchronous API call, providing transparency and real-time updates to all webhook callback recipients. Unlike synchronous operations, where processes execute sequentially and can create system bottlenecks, this approach is designed to maximize efficiency: an operation initiated via our APIs does not require the caller to wait. Instead, the process runs in the background while other tasks proceed concurrently.
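The submit-then-notify pattern can be sketched with `asyncio`: `submit()` schedules the work and returns immediately, and when the work finishes every registered callback receives a completion event. This is an assumption-laden illustration. In a real webhook system each callback would be an HTTP POST to a subscriber's registered URL; here in-process coroutines stand in for those deliveries, and the class and event field names are hypothetical.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List, Set

Event = Dict[str, Any]
WebhookHandler = Callable[[Event], Awaitable[None]]

class AsyncRequestRunner:
    """Accepts a request, returns at once, and notifies webhooks on completion."""

    def __init__(self) -> None:
        self.subscribers: List[WebhookHandler] = []
        self._tasks: Set[asyncio.Task] = set()  # keep references so tasks are not garbage-collected

    def subscribe(self, handler: WebhookHandler) -> None:
        self.subscribers.append(handler)

    def submit(self, request_id: str, work: Callable[[], Awaitable[Any]]) -> str:
        """Schedule work in the background; the caller does not wait for it."""
        task = asyncio.get_running_loop().create_task(self._run(request_id, work))
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)
        return request_id

    async def _run(self, request_id: str, work: Callable[[], Awaitable[Any]]) -> None:
        try:
            event: Event = {"requestId": request_id, "status": "completed", "result": await work()}
        except Exception as exc:
            event = {"requestId": request_id, "status": "failed", "error": str(exc)}
        # Fan the completion notice out to every registered callback.
        await asyncio.gather(*(handler(event) for handler in self.subscribers))

async def main():
    runner = AsyncRequestRunner()
    received: List[Event] = []
    done = asyncio.Event()

    async def on_complete(event: Event) -> None:
        received.append(event)
        done.set()

    runner.subscribe(on_complete)

    async def fetch_cap_table() -> Dict[str, int]:
        await asyncio.sleep(0.01)  # simulated database work
        return {"rows": 3}

    runner.submit("req-1", fetch_cap_table)
    returned_immediately = not received  # nothing has completed yet
    await done.wait()
    return returned_immediately, received

if __name__ == "__main__":
    print(asyncio.run(main()))
```

Failures are reported through the same channel with a `"failed"` status, so subscribers do not need a separate polling path to learn that a request did not complete.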

Database Model

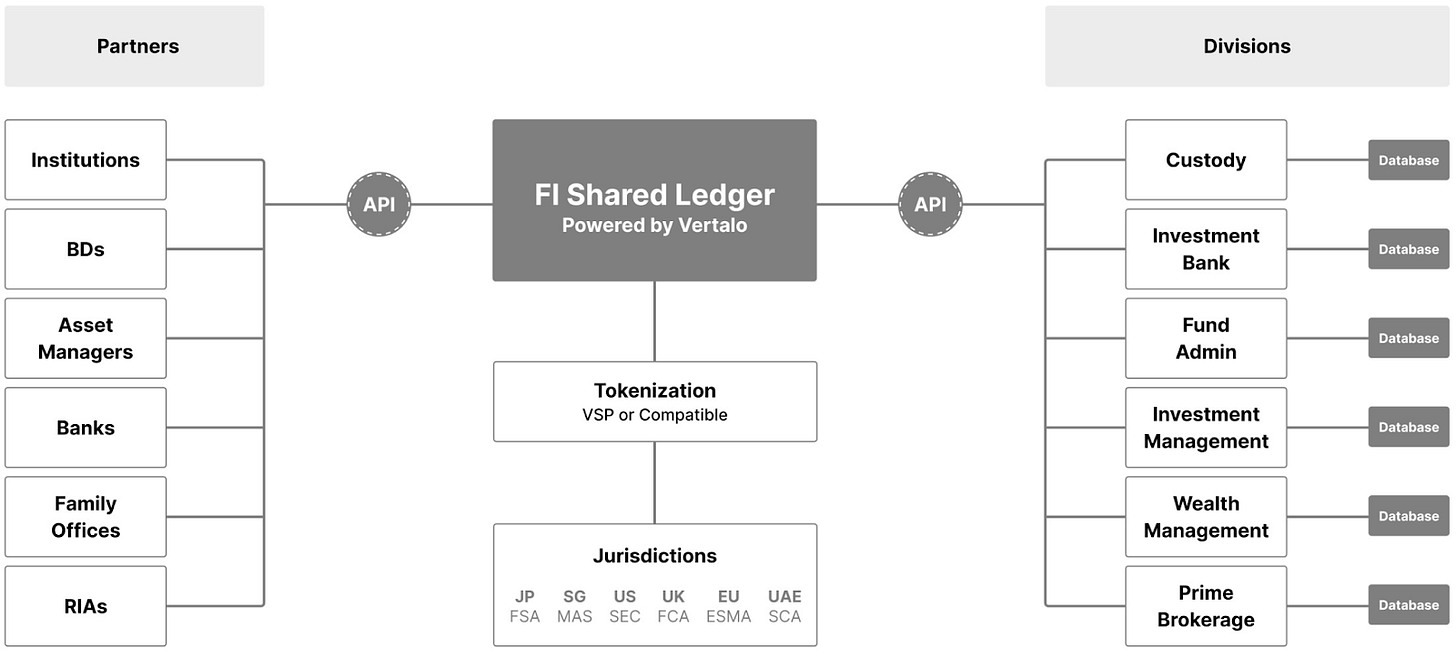

The Vertalo database architecture is illustrated below:

The core of Vertalo’s database is a Shared Ledger. Vertalo uses distinct ledgers for different data types because specialized storage improves data integrity and optimization. By segmenting data into purpose-built ledgers, namely a Transfer Agent ledger for transfer agent data, an ATS (alternative trading system) ledger for ATS data, and the General ledger (“Ledger”) for a comprehensive data history, Vertalo gives each data type a structure aligned with its unique requirements. This categorization increases efficiency and helps maintain data clarity and integrity. Data retrieval, auditing, and management become more straightforward when each ledger is optimized for its specific data type. The approach also enforces separation of concerns and reduces the possibility of data contamination and leaks by cleanly segregating data types with different access criteria.
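A minimal sketch of the segmentation idea: each ledger carries its own required structure and its own reader roles, so an entry is validated against the ledger it belongs to and access is checked per ledger. The field names and role names below are illustrative assumptions, not Vertalo's actual ledger schemas or roles.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, List

@dataclass
class Ledger:
    name: str
    required_fields: FrozenSet[str]   # structure specific to this data type
    reader_roles: FrozenSet[str]      # access criteria specific to this ledger
    entries: List[Dict[str, Any]] = field(default_factory=list)

    def append(self, entry: Dict[str, Any]) -> None:
        missing = self.required_fields.difference(entry)
        if missing:
            raise ValueError(f"{self.name}: missing fields {sorted(missing)}")
        self.entries.append(dict(entry))

    def read(self, role: str) -> List[Dict[str, Any]]:
        if role not in self.reader_roles:
            raise PermissionError(f"role {role!r} may not read {self.name}")
        return [dict(e) for e in self.entries]

# Illustrative ledgers; fields and roles are hypothetical.
LEDGERS = {
    "transfer_agent": Ledger("transfer_agent",
                             frozenset({"holder", "token_id", "quantity"}),
                             frozenset({"transfer_agent", "issuer"})),
    "ats": Ledger("ats",
                  frozenset({"order_id", "token_id", "price", "quantity"}),
                  frozenset({"ats_operator", "broker_dealer"})),
    "general": Ledger("general",
                      frozenset({"event", "timestamp"}),
                      frozenset({"auditor", "issuer", "transfer_agent"})),
}
```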

Tying together the different ledgers is a common underlying Blockchain Address and Token ID (“BCAT”) database system. This unifying database acts as the backbone for all ledgers, ensuring that separate data types can be connected and interrelated when necessary. Operations that rely on data from multiple ledgers use the BCAT database to ensure that reads and writes across ledgers are fully coherent. The combination of distinct data ledgers and BCAT supports flexible configuration and integration with multiple sources and multiple consumers of ledger data. This amalgamation of specialization and unification lies at the heart of the architecture, and supports integration and interoperability without compromise.
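One way to picture the BCAT layer is as a shared key table that every ledger references, so a single database transaction can span ledgers. The sketch below uses SQLite purely for illustration; the table and column names are hypothetical, and nothing here describes Vertalo's actual database engine. It shows a trade that writes to both the ATS ledger and the Transfer Agent ledger atomically: if either write fails, neither is kept.

```python
import sqlite3

SCHEMA = """
CREATE TABLE bcat (
    id       INTEGER PRIMARY KEY,
    address  TEXT NOT NULL,   -- "Blockchain Address": a position in an entity
    token_id TEXT NOT NULL,   -- "token": the entity itself
    UNIQUE (address, token_id)
);
CREATE TABLE ta_ledger (
    bcat_id  INTEGER NOT NULL REFERENCES bcat(id),
    quantity INTEGER NOT NULL CHECK (quantity >= 0)
);
CREATE TABLE ats_ledger (
    bcat_id     INTEGER NOT NULL REFERENCES bcat(id),
    price_cents INTEGER NOT NULL,
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
"""

def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn

def bcat_id(conn: sqlite3.Connection, address: str, token_id: str) -> int:
    """Return the shared key for (address, token_id), creating it if needed."""
    conn.execute("INSERT OR IGNORE INTO bcat (address, token_id) VALUES (?, ?)",
                 (address, token_id))
    row = conn.execute("SELECT id FROM bcat WHERE address = ? AND token_id = ?",
                       (address, token_id)).fetchone()
    return row[0]

def record_trade(conn: sqlite3.Connection, address: str, token_id: str,
                 price_cents: int, quantity: int, new_position: int) -> None:
    """Write the ATS fill and the resulting TA position together, or not at all."""
    with conn:  # commits on success, rolls back if any statement fails
        key = bcat_id(conn, address, token_id)
        conn.execute("INSERT INTO ats_ledger VALUES (?, ?, ?)", (key, price_cents, quantity))
        conn.execute("INSERT INTO ta_ledger VALUES (?, ?)", (key, new_position))
```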

In Vertalo’s database system, we use blockchain-centric terminology for key concepts. Specifically, “token” represents an “entity,” and “Blockchain Address” denotes a “position in an entity.” While these terms align with blockchain idioms and resonate with those familiar with the domain, we recognize that they may be ambiguous to developers and stakeholders with little exposure to distributed ledger technology (DLT). In this context, a “token” is not merely a unit of value or a cryptographic asset but a representation of a distinct entity within our system. Similarly, a Blockchain Address is not merely a transactional endpoint but a position related to that entity. This terminology links traditional ledger architectures with the concepts of DLT and shows how DLT concepts can be integrated into private asset management. We emphasize clarifying these terms for all users to ensure transparency and comprehension.

Vertalo’s platform was architected with extensibility as a core principle. The platform offers an intuitive interface and robust backend mechanisms that simplify the creation of new, distinct ledgers. This capability is more than a feature; it reflects strategic foresight. Because the system remains open for extension, it is positioned as a future-proof solution, ready to accommodate emerging data requirements, integrations, and technological advancements. In a domain where the volume, variety, and velocity of data can expand rapidly, such flexibility is indispensable. By making ledgers easy to create and extend, Vertalo addresses its users' present needs while preparing for future complexity.

Capabilities and Differentiators

Integration-First Approach

Vertalo applies the same approach to integrations internally as it does with partners. We treat Vertalo itself as a third-party platform, integrate it into our own Shared Ledger, and then provide an easy-to-use API on top of that integration. Integrating ourselves as a third party gives us invaluable perspective in the increasingly complex landscape of digital integration. It also helps us abstract away the intricacies of the underlying database, streamlining adoption for potential users. This abstraction is especially valuable in sectors where users may lack deep technical expertise or the bandwidth to learn the details of new platforms. Rather than engaging with the Shared Ledger's intricate details, stakeholders interact with it indirectly, through the more user-friendly API layer.

Adopters can quickly capitalize on the advantages of the API without the daunting task of mastering a new shared-ledger paradigm. Vertalo's APIs are attuned to the demands of FIs, where rapid integration and ease of use are not just bonuses but expectations. By providing a seamless bridge to our Shared Ledgers, we effectively remove the barriers to entry, paving the way for simple and scalable integration as well as more widespread utilization of the platform and the innovative capabilities it offers.

The extensibility of Vertalo's APIs results from forward-thinking design and an emphasis on adaptability. In the rapidly evolving landscape of financial technology, the ability to extend and adapt systems to cater to emerging needs is paramount. An extensible API addresses not only current requirements, but also anticipated future demand. For FIs, this extensibility translates to a reduced need for overhauls or shifts to new systems as market dynamics change. Instead, the APIs can evolve, integrate new features, and adjust to new scenarios, providing a more sustainable and long-term technological foundation.

The extensibility of Vertalo’s APIs also offers potential cost savings for FIs. Implementing new systems or making significant changes to existing ones can be a cumbersome and expensive undertaking for any organization. With APIs designed for extensibility and a user-friendly interface, however, FIs can introduce new functionality more easily as required. This flexibility keeps an institution at the forefront of FinTech innovation. In a sector where competitive edge often depends on technological adeptness and innovation, the flexibility of Vertalo’s APIs is a significant differentiator, enabling FIs to stay agile and responsive to market needs and changes.

Developer-Friendly Tools

When developing APIs for both internal and external consumption, developer experience is a crucial consideration. To this end, Vertalo’s GraphQL implementation exposes a single endpoint that can accept complex queries and mutations based on a rich, uniform query language.

Vertalo's interface supports "rolled up" API calls, which bundle multiple operations or data requests into a single call. In financial data processing, where large volumes of interconnected, time-sensitive information are handled regularly, rolled-up operations streamline data handling, reduce network overhead, and use resources more efficiently. Rolled-up calls make batch processes, such as end-of-day settlements or bulk data retrieval, more efficient and less error-prone.
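The source does not say how Vertalo implements rolled-up calls, but standard GraphQL offers one common way to bundle requests: several aliased root fields in a single document, each with its own variable. The sketch below generates such a document for a batch of investor lookups; the `investor` field and its subfields are hypothetical. Passing IDs as variables, rather than splicing them into the query text, keeps the document well-formed regardless of ID contents.

```python
import json
from typing import List

def rolled_up_query(investor_ids: List[str]) -> str:
    """Bundle several lookups into one GraphQL document using field aliases."""
    if not investor_ids:
        raise ValueError("at least one investor ID is required")
    var_defs = ", ".join(f"$id{i}: ID!" for i in range(len(investor_ids)))
    fields = "\n".join(
        # Each alias (inv0, inv1, ...) becomes a separate key in the response.
        f"  inv{i}: investor(id: $id{i}) {{ name holdings {{ tokenName quantity }} }}"
        for i in range(len(investor_ids))
    )
    return f"query EndOfDay({var_defs}) {{\n{fields}\n}}"

def rolled_up_body(investor_ids: List[str]) -> str:
    """JSON request body carrying the bundled query and its variables."""
    variables = {f"id{i}": inv for i, inv in enumerate(investor_ids)}
    return json.dumps({"query": rolled_up_query(investor_ids), "variables": variables})

if __name__ == "__main__":
    print(rolled_up_query(["inv-1", "inv-2"]))
```

The same aliasing works for mutations; per the GraphQL specification, top-level mutation fields in one document execute serially, in order.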

Vertalo’s APIs also offer create-or-get calls, which provide developers a convenient and consistent way to insert data if not present or retrieve it if already present. This developer-friendly convention simplifies common data-exchange operations, making integrations easier and more robust.
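Create-or-get semantics can be sketched against any store with a unique key. The example below uses SQLite's `INSERT OR IGNORE` followed by a lookup, inside one transaction; the `investor` table and its use of `email` as the natural key are assumptions for illustration, not Vertalo's schema. Because repeating the call is harmless, a client can safely retry after a timeout without creating duplicates.

```python
import sqlite3
from typing import Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS investor (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE,   -- natural key that identifies "the same" record
    name  TEXT NOT NULL
)
"""

def connect() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    return conn

def create_or_get_investor(conn: sqlite3.Connection, email: str, name: str) -> Tuple[int, bool]:
    """Insert the investor if absent, otherwise return the existing row.

    Returns (investor_id, created). An existing row is returned unchanged,
    so a repeated call with a different name does not overwrite it.
    """
    with conn:
        cur = conn.execute(
            "INSERT OR IGNORE INTO investor (email, name) VALUES (?, ?)",
            (email, name),
        )
        created = cur.rowcount == 1
        (investor_id,) = conn.execute(
            "SELECT id FROM investor WHERE email = ?", (email,)
        ).fetchone()
    return investor_id, created
```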

From a developer's perspective, these features create a more intuitive and efficient coding experience. Instead of wrestling with the API's limitations or crafting workarounds, developers can focus on building and extending robust financial applications that leverage the full spectrum of Vertalo's capabilities. Given the critical nature of financial data, where errors can have severe repercussions, an API that's easier to work with becomes a valuable tool, enhancing accuracy, speed, and overall product reliability.

Role-Based Data Access

Before making API calls, a Vertalo API user must authenticate and then authorize as a defined role. Subsequent API calls operate as the authorized role. Access to data is restricted by role below the API level, so every API call is subject to the same role-based data-access boundaries.

Access restrictions allow users to see data only if they are party to that data. This system maps well onto financial data where most data are joint productions of two parties under a contractual relationship. Single- and multi-party data also work well in this access-control system. Applying the restrictions below the API level makes them apply automatically to new APIs, which reduces the complexity of API extensions.
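The sketch below illustrates the principle of enforcing party-based visibility in the data layer rather than in each API handler, so that a newly added handler inherits the restriction automatically. In a relational database, one common way to do this is row-level security (for example, PostgreSQL row security policies); the source does not say which mechanism Vertalo uses. `Record`, `DataLayer`, and the two handlers are hypothetical names.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

@dataclass(frozen=True)
class Record:
    record_id: str
    parties: FrozenSet[str]   # parties to this data: one, two, or many
    body: str

class DataLayer:
    """All reads go through here, so every API inherits the same filter."""

    def __init__(self, records: List[Record]):
        self._records = list(records)

    def visible_to(self, party: str) -> List[Record]:
        # A caller sees a record only if it is party to that record.
        return [r for r in self._records if party in r.parties]

# Two hypothetical API handlers; neither implements access control itself.
def list_records(db: DataLayer, caller: str) -> List[str]:
    return [r.record_id for r in db.visible_to(caller)]

def count_records(db: DataLayer, caller: str) -> int:
    return len(db.visible_to(caller))
```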

Implementation and Deployment

Vertalo's platform can be deployed in a cloud environment hosted by either Vertalo or the client. The Vertalo-hosted environment minimizes start-up costs and enables rapid development of API integrations with minimal overhead. The client-hosted environment places the platform fully inside the client’s security perimeter, simplifying integration with existing systems and thereby accelerating digital transformation along with asset tokenization. Clients can also combine the two models: Vertalo hosting for rapid development and client hosting for production integration.

The API-based integration facility of Vertalo's platform is a notable advantage in today's rapidly evolving, security-conscious financial landscape. Over time, most FIs have built intricate technology infrastructures that support a vast array of services and business units. A complete overhaul of such systems to accommodate new technological advancements is rarely practical or cost-effective. Vertalo's API-based integration capability bridges the gap between traditional financial infrastructures and the emerging world of digital and tokenized assets, ensuring that FIs can remain on the cutting edge of innovation without the disruptive, risky, and expensive process of entirely revamping their platforms.

The flexible choice of operating environment for Vertalo's platform is especially critical in the financial sector where jurisdictional compliance, data integrity, and security are non-negotiable requirements. Working within a known and reliable security system lets FIs confidently expand into tokenized assets without compromising on the stringent security standards they must uphold.

By reducing the difficulty of integrating with existing security environments and systems, Vertalo’s platform lets clients take full advantage of tokenization and DLT while minimizing the risk and disruption to their existing operations. Vertalo's APIs usher FIs into a new digital era where asset liquidity, transparency, and security are greatly enhanced with these new technologies.

Use Case: Shared Ledgers Facilitate Network Development

Our Shared Ledger is a technology implementation that reduces time and costs through increased efficiency, speed, and reliability of data handling within our divisions. The underlying dependency is Vertalo's APIs.

Networks are activated with data shared through APIs. The Shared Ledger connects (historically) incompatible systems of relevant parties within our financial infrastructure, using Vertalo APIs.

Golden Source

The "Shared Ledger" concept described here holds significant promise for FIs looking to modernize their operations. At its core, a shared ledger serves as a “golden source” of information that multiple parties can access and update in real time. This common access ensures that data is not siloed within a single department or division but is accessible and actionable across the institution. The immediate implication of this approach is a drastic reduction in the time typically spent on data retrieval and reconciliation. When departments within an FI operate on disparate systems or databases, significant staff hours are spent cross-referencing, verifying, transferring, and reconciling records. The Shared Ledger eliminates this work by presenting a single, unified, real-time source of truth: the golden source.

The API-based architecture accentuates the ledger's versatility and scalability. Vertalo's APIs facilitate seamless integration of data into the Shared Ledger across various internal platforms, tools, and software employed by the FI. This makes the ledger an integral part of the FI's technological ecosystem. The benefits are twofold: firstly, integration via API means that the adoption of the Shared Ledger can leverage existing systems without requiring a massive overhaul. It integrates with minimal friction. Secondly, by functioning as the golden source in the data flow within the FI, the API-enhanced Shared Ledger amplifies efficiency gains across all integrated systems, further driving down operational costs and bolstering the speed and reliability of data handling.
